There is a legend in computer science circles about the video game Quake. The legend tells of an unknown programmer who used a small software trick to remove a lot of repetitive computation from the game’s graphics rendering. This small hack upended how graphics were done, which upended all of compute, led to the destruction of some of the hottest companies of the day and, arguably, to the rise of the Internet and the Cloud. Could something like that happen in AI? Maybe.

The legend goes something like this. In the 1990s the only way to do 3D graphics on a computer was to use very expensive workstations from companies like Silicon Graphics and Sun. The compute required was beyond the capabilities of any consumer-grade device. To greatly oversimplify, much of the compute needed to generate realistic-looking graphics goes into determining the distance between two points, say a hero’s sword and the torch shining on the wall. The traditional way to do this calculation is to revert to high school geometry and the Pythagorean theorem, which at some point requires computing 1/√x, the inverse square root of x. At the time, the way to compute it was essentially to guess, and then repeat the math until the guess got close enough to the right answer. This is compute intensive, requiring the computer to run all those guesses for every pixel on the screen at the refresh rate, which works out to something like 30 million calculations a second times however many guesses it takes.
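
To make the "guess and repeat" idea concrete, here is a minimal sketch of one textbook way to do it, Newton-Raphson refinement. We are not claiming this is what any particular 1990s renderer ran; the function name `inv_sqrt_newton`, the starting guess and the tolerance are our own assumptions for illustration.

```python
import math


def inv_sqrt_newton(x, tol=1e-9, max_iter=100):
    """Approximate 1/sqrt(x) by guessing, then refining the guess repeatedly.

    Returns (approximation, number_of_refinement_steps).
    """
    if x <= 0:
        raise ValueError("x must be positive")
    # Crude starting guess (an assumption): never larger than the true answer,
    # which keeps the iteration below from overshooting and diverging.
    y = min(1.0, 1.0 / x)
    for steps in range(1, max_iter + 1):
        # Newton-Raphson update for f(y) = 1/y^2 - x
        y_next = y * (1.5 - 0.5 * x * y * y)
        if abs(y_next - y) < tol:
            return y_next, steps
        y = y_next
    return y, max_iter


if __name__ == "__main__":
    value, steps = inv_sqrt_newton(100.0)
    print(value, "vs", 1.0 / math.sqrt(100.0), "after", steps, "steps")
```

The number that matters is the step count: every refinement costs several multiplications, and that cost is paid again for every value on every frame.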

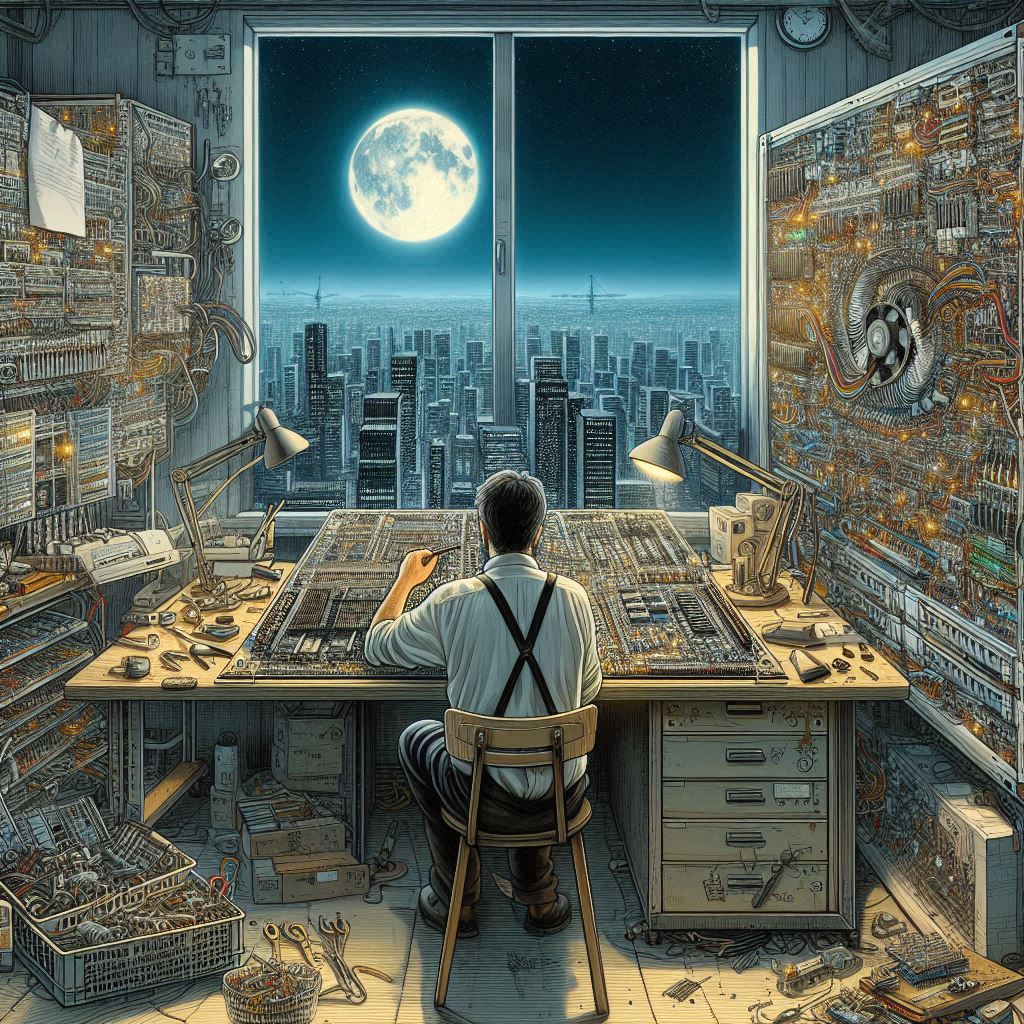

Then one day, some genius at Id Software (maybe it was legendary coder John Carmack, but sources vary) found that instead of running those inverse square root loops, the computer could reinterpret the number’s bits as an integer, shift them and subtract the result from an obscure constant to get a ‘close enough’ answer, basically saving an order of magnitude of compute. This meant that consumer PCs (with a decent Nvidia graphics card) could render 3D graphics. Quake would go on to be a major hit, in large part due to its revolutionary graphics. Silicon Graphics and their peers rapidly lost their dominance in the market. This also opened up the space for a transition from workstations to servers, which of course led to the Internet and the Cloud, to say nothing of all the games and videos we run on consumer devices today. This is the power of software: the ability to make small, smart changes and produce outsized results.
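
For the curious, the trick is short enough to sketch. The version below is our Python transliteration of the widely circulated routine, using the constant `0x5F3759DF` that appears in the released Quake III Arena source. The original is C operating directly on 32-bit floats; the `struct` round-trip here is just our way of imitating that bit reinterpretation, and the function name is ours.

```python
import struct


def fast_inv_sqrt(x):
    """Approximate 1/sqrt(x) for positive x with one bit trick and one refinement."""
    # Reinterpret the 32-bit float's bit pattern as an unsigned 32-bit integer.
    i = struct.unpack("<I", struct.pack("<f", x))[0]
    # Shift right by one (roughly halving the exponent) and subtract from the
    # "magic" constant to get a surprisingly good first guess.
    i = 0x5F3759DF - (i >> 1)
    # Reinterpret the integer's bits as a float again.
    y = struct.unpack("<f", struct.pack("<I", i))[0]
    # A single Newton-Raphson step instead of a whole loop of them.
    return y * (1.5 - 0.5 * x * y * y)


if __name__ == "__main__":
    for value in (0.25, 2.0, 100.0):
        print(value, fast_inv_sqrt(value), value ** -0.5)
```

The result is not exact, typically landing within a fraction of a percent of the true value, which is the whole point: ‘close enough’ in one pass rather than precise after many.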

We were reminded of this recently when we came across an academic paper, the snappily named “Scalable MatMul-free Language Modeling” by Zhu, Zhang, Sifferman et al. The authors created a new software system for running AI inference that essentially eliminates the need for matrix multiplication (thus MatMul-free). It may be hard to grasp how big a deal this could be. Matrix multiplication is the heart of what we call “AI”. When overwhelmed with AI hype and media exposure, we have been known to revert to describing AI as just fancy matrix multiplication. If we could run AI models without matrix multiplication, it would shake up the entire AI complex in a manner similar to what the inverse square root hack did to the compute industry of its day. There is some great irony here, in that Nvidia’s very existence depended on that graphics revolution of the late 1990s, which drove their business for years.

Does the approach from Zhu, Zhang, Sifferman et al. really allow us to eliminate matrix multiplication? We have no idea. The math in the paper is beyond any ability conveyed by the C+ we got in linear algebra, which marked the end of our college math career. Essentially, the team replaced matrix multiplication with several advanced forms of ‘quantization’, that is, degrading the precision of the calculation, meaning they found a way to get ‘good enough’ results. Other software teams have used various forms of quantization before; it is all over the place. What stands out here is that they were able to get their results by running their software on an off-the-shelf FPGA part which costs a fraction of an Nvidia H100 and uses a tenth of the power.
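
As far as we can follow it, one core idea is to restrict the weights to just three values, -1, 0 and +1, so that "multiplying" an input by a weight collapses into adding it, subtracting it or skipping it. The toy sketch below is our own illustration of that one idea, not the authors' code, and it ignores everything else in the paper; the function names are hypothetical.

```python
def ternary_matvec(weights, x):
    """Compute W @ x when every entry of W is -1, 0, or +1, using no multiplications."""
    out = []
    for row in weights:
        acc = 0.0
        for w, xi in zip(row, x):
            if w == 1:
                acc += xi   # +1 weight: just add the input
            elif w == -1:
                acc -= xi   # -1 weight: just subtract it
            # 0 weight: the input is skipped entirely
        out.append(acc)
    return out


def ordinary_matvec(weights, x):
    """Reference version with ordinary multiply-and-add, for comparison."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


if __name__ == "__main__":
    W = [[1, 0, -1],
         [-1, 1, 1]]
    x = [2.0, 3.0, 5.0]
    print(ternary_matvec(W, x))   # [-3.0, 6.0]
    print(ordinary_matvec(W, x))  # same values, computed with multiplications
```

Additions and subtractions are far cheaper in silicon than multiplications, which is at least consistent with the result running well on a modest FPGA.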

If this works, it would wreck a lot of companies, starting with Nvidia. But to be very clear, that is a giant “IF”. This approach undoubtedly has drawbacks and trade-offs which need to be explored. Our point here is not to say that this is the solution, but just to draw attention to the fact that there could be a solution out there that would present a radical change to a radically changing industry. We all know this is possible. (That being said, if the team behind the paper is interested in raising money to commercialize this approach, feel free to drop us a line.)
